Azure AI ¶

LUIS¶

Language Understanding (LUIS) is a cloud-based conversational AI service that applies custom machine-learning intelligence to a user's conversational, natural language text to predict overall meaning, and pull out relevant, detailed information. It allows users to interact with your applications, bots, and IoT devices by using natural language.

As a part of Azure Cognitive Services, LUIS enables your bot to understand natural language by identifying user intents and entities.
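As a rough illustration of what "intents and entities" means in practice, the sketch below reads the top intent and the entities out of a prediction payload. The payload is hand-written for illustration and only modeled on the general shape of a LUIS prediction response; the helper name `summarize_prediction`, the intent `BookFlight`, and the entity `Destination` are all assumptions, not part of any real app.

```python
from typing import Any


def summarize_prediction(response: dict[str, Any]) -> tuple[str, dict[str, Any]]:
    """Return the top intent name and the entities from a LUIS-style response."""
    prediction = response["prediction"]
    # Entities may be absent when nothing was extracted, so default to an empty dict.
    return prediction["topIntent"], prediction.get("entities", {})


# Hypothetical sample payload, hand-written for illustration (not real service output).
sample_response = {
    "query": "book a flight to Paris",
    "prediction": {
        "topIntent": "BookFlight",
        "intents": {"BookFlight": {"score": 0.97}, "None": {"score": 0.02}},
        "entities": {"Destination": ["Paris"]},
    },
}

intent, entities = summarize_prediction(sample_response)
print(intent, entities)
```

A bot would typically branch on the returned intent and use the entities as parameters for the action it takes.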

QnA Maker¶

QnA Maker is a cloud-based Natural Language Processing (NLP) service that allows you to create a natural conversational layer over your data. It is used to find the most appropriate answer for any input from your custom Knowledge Base (KB) of information.

QnA Maker is commonly used to build conversational client applications, which include social media applications, chat bots, and speech-enabled desktop applications. QnA Maker doesn't store customer data. All customer data (question answers and chat logs) is stored in the region the customer deploys the dependent service instances in.

Text Analytics¶

Text Analytics mines insights from unstructured text using natural language processing (NLP), with no machine learning expertise required. For example, sentiment analysis gives you a deeper understanding of customer opinions.

Dispatch¶

Dispatch uses sample utterances for each of your bot's different tasks (LUIS, QnA Maker, or custom), and builds a model that can be used to properly route your user's request to the right task, even across multiple bots.
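The routing idea can be sketched as a lookup from the top-scoring dispatch intent to a handler for the matching task. This is a minimal sketch, not the Dispatch tool itself: the intent names `l_HomeAutomation` and `q_FAQ`, the handler functions, and the fallback behavior are all hypothetical.

```python
from typing import Callable


def handle_luis(utterance: str) -> str:
    # Placeholder for a call to the LUIS app that owns this task.
    return f"LUIS app handles: {utterance}"


def handle_qna(utterance: str) -> str:
    # Placeholder for a call to the QnA Maker knowledge base.
    return f"QnA Maker handles: {utterance}"


def handle_fallback(utterance: str) -> str:
    # Used when the dispatch model returns an intent with no registered task.
    return f"No task matched: {utterance}"


# Hypothetical mapping from dispatch intent names to task handlers.
ROUTES: dict[str, Callable[[str], str]] = {
    "l_HomeAutomation": handle_luis,
    "q_FAQ": handle_qna,
}


def route(top_intent: str, utterance: str) -> str:
    """Send the utterance to the handler registered for the top intent."""
    handler = ROUTES.get(top_intent, handle_fallback)
    return handler(utterance)


print(route("q_FAQ", "What are your opening hours?"))
```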

Anomaly Detector¶

Anomaly Detector is an AI service with a set of APIs that lets you monitor and detect anomalies in your time series data with little machine learning (ML) knowledge, using either batch validation or real-time inference.

With Anomaly Detector, you can either detect anomalies in one variable using Univariate Anomaly Detector, or detect anomalies in multiple variables with Multivariate Anomaly Detector.
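To make the univariate, batch-style case concrete, here is a minimal sketch that flags points far from the mean of a single series using a z-score. This is a deliberately simple stand-in technique and not the algorithm the Anomaly Detector service uses; the function name `find_anomalies`, the threshold, and the sample readings are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def find_anomalies(values: list[float], threshold: float = 2.0) -> list[int]:
    """Return indices of points whose z-score exceeds the threshold."""
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation; needs at least two points
    if sigma == 0:
        # A constant series has no outliers under this definition.
        return []
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical sensor readings with one obvious spike at index 5.
readings = [10.0, 10.2, 9.9, 10.1, 10.0, 25.0, 10.1, 9.8]
print(find_anomalies(readings))
```

The whole series is scored at once here, which corresponds to the batch mode described above; a real-time variant would score each new point against a recent window instead.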

Direct Line Speech¶

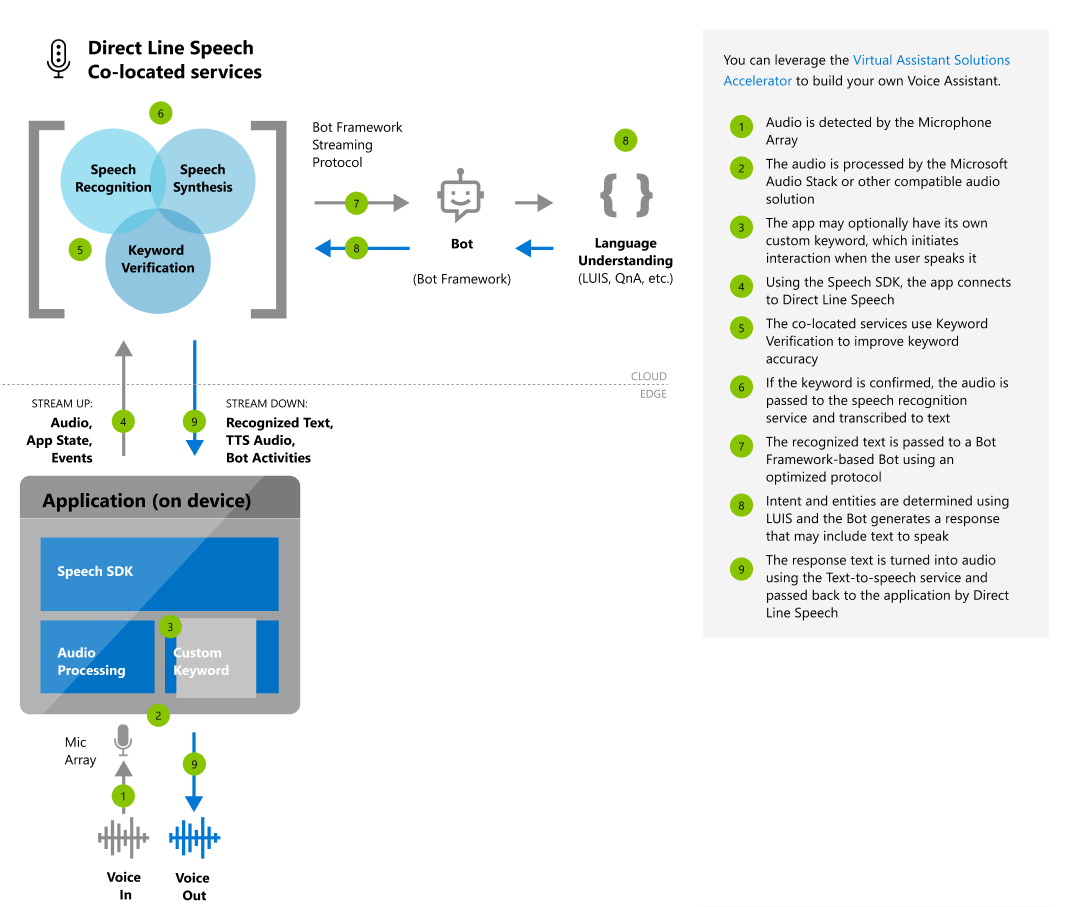

Direct Line Speech is a robust, end-to-end solution for creating a flexible, extensible voice assistant. It is powered by the Bot Framework and its Direct Line Speech channel, which is optimized for voice-in, voice-out interaction with bots.

Azure Data Catalog¶

Azure Data Catalog is a fully managed cloud service that lets users discover the data sources they need and understand the data sources they find. At the same time, Data Catalog helps organizations get more value from their existing investments.

With Data Catalog, any user (analyst, data scientist, or developer) can discover, understand, and consume data sources in their data landscape. Data Catalog includes a crowdsourcing model of metadata and annotations, so everyone can contribute to making data discoverable and usable. It's a single, central place for all of an organization's users to contribute their knowledge and build a community and culture of data.

Azure AI Video Indexer¶

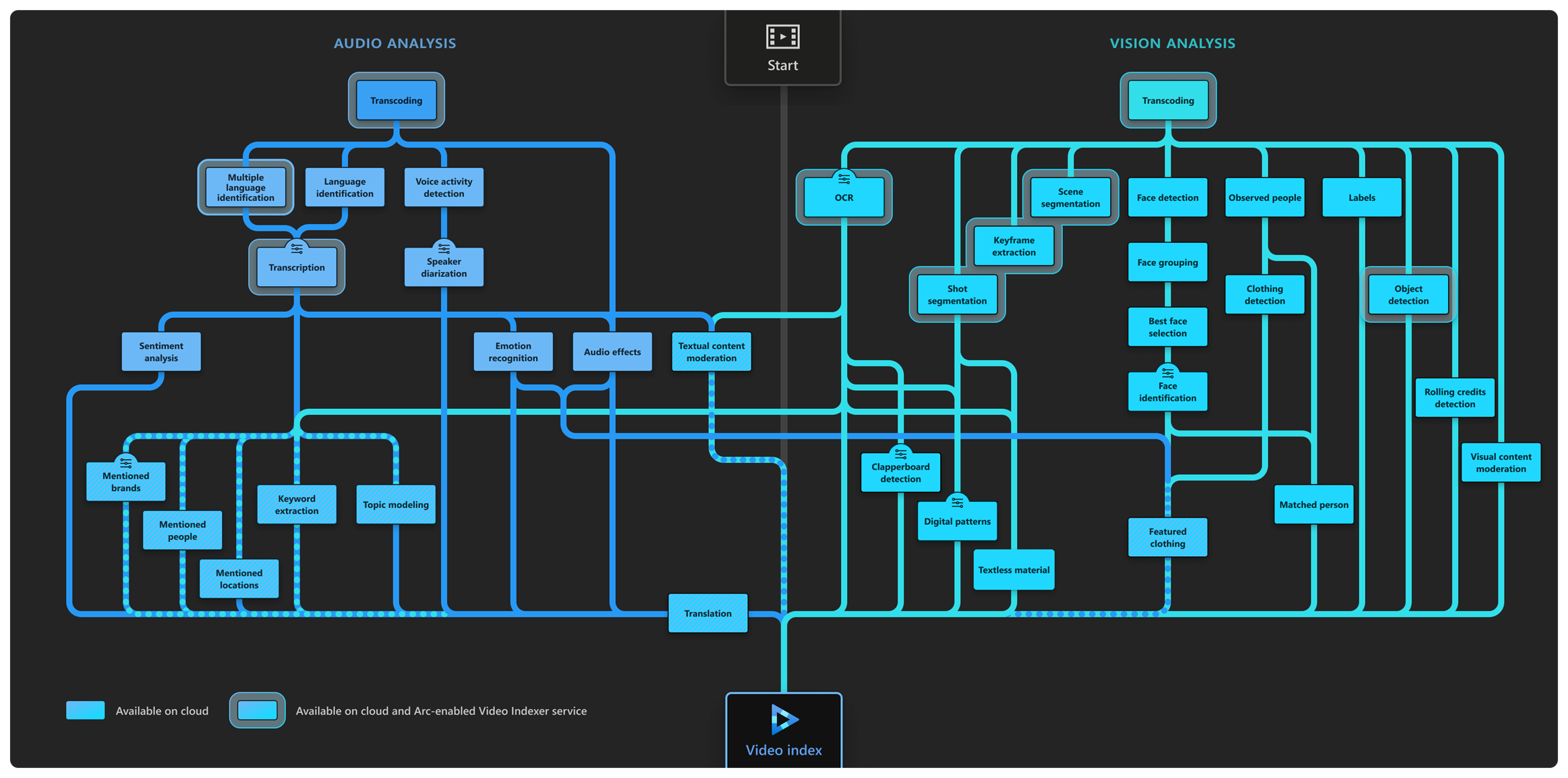

Azure AI Video Indexer is a cloud application, part of Azure AI services, built on Azure Media Services and Azure AI services (such as Face, Translator, Azure AI Vision, and Speech). It enables you to extract insights from your videos using the Azure AI Video Indexer video and audio models.

Azure AI Video Indexer analyzes the video and audio content by running 30+ AI models, generating rich insights. The illustration in this section shows the audio and video analysis that Azure AI Video Indexer performs in the background.

Named Entity Recognition (NER)¶

Named Entity Recognition (NER) is one of the features offered by Azure Cognitive Service for Language, a collection of machine learning and AI algorithms in the cloud for developing intelligent applications that involve written language. The NER feature can identify and categorize entities in unstructured text, such as people, places, organizations, and quantities.

KB¶

Projections are the component of a knowledge store definition that determines where AI-enriched content is stored in Azure Storage. Projections determine the type, quantity, and composition of the data structures containing your content.

Source and Destination of Projection

All projections have source and destination properties. The source is always internal content from an enrichment tree created during skillset execution. The destination is the name and type of an external object that's created and populated in Azure Storage.

Except for file projections, which only accept binary images, the source must be:

- Valid JSON

- A path to a node in the enrichment tree (for example, "source": "/document/objectprojection")
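The source and destination pairing described above can be sketched as a small knowledge store definition written as a Python dictionary, plus a check that every projection's source is a path into the enrichment tree. This is a sketch under stated assumptions: the container name `enriched-docs`, the placeholder connection string, and the helper `check_projection_sources` are hypothetical, and the check assumes every projection carries an explicit `source`.

```python
def check_projection_sources(knowledge_store: dict) -> list[str]:
    """Collect projection sources, rejecting any that is not an enrichment-tree path."""
    sources = []
    for group in knowledge_store["projections"]:
        for kind in ("tables", "objects", "files"):
            for projection in group.get(kind, []):
                source = projection["source"]
                # Enrichment-tree paths are rooted at /document.
                if not source.startswith("/document"):
                    raise ValueError(f"Not an enrichment-tree path: {source}")
                sources.append(source)
    return sources


# Hypothetical definition: one object projection, no table or file projections.
knowledge_store = {
    "storageConnectionString": "<your-storage-connection-string>",
    "projections": [
        {
            "tables": [],
            "objects": [
                {
                    # Destination: the blob container that receives the objects.
                    "storageContainer": "enriched-docs",
                    # Source: a node in the enrichment tree.
                    "source": "/document/objectprojection",
                }
            ],
            "files": [],
        }
    ],
}

print(check_projection_sources(knowledge_store))
```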