Prompt Engineering 🎹¶

Best practices¶

-

Be precise in saying what to do (write, summarize, extract information).

-

Avoid saying what

not to doand saywhat to do instead -

Be specific: instead of saying “in a few sentences”, say “in 2–3 sentences”.

-

Add tags or delimiters to structurize the prompt.

-

Ask for a structured output (JSON. HTML) if needed.

-

Ask the model to verify whether the conditions are satisfied (e.g. “if you do not know the answer. say “No information”).

-

Ask a model to first explain and then provide the answer (otherwise a model may try to justify an incorrect answer).

Single Prompting¶

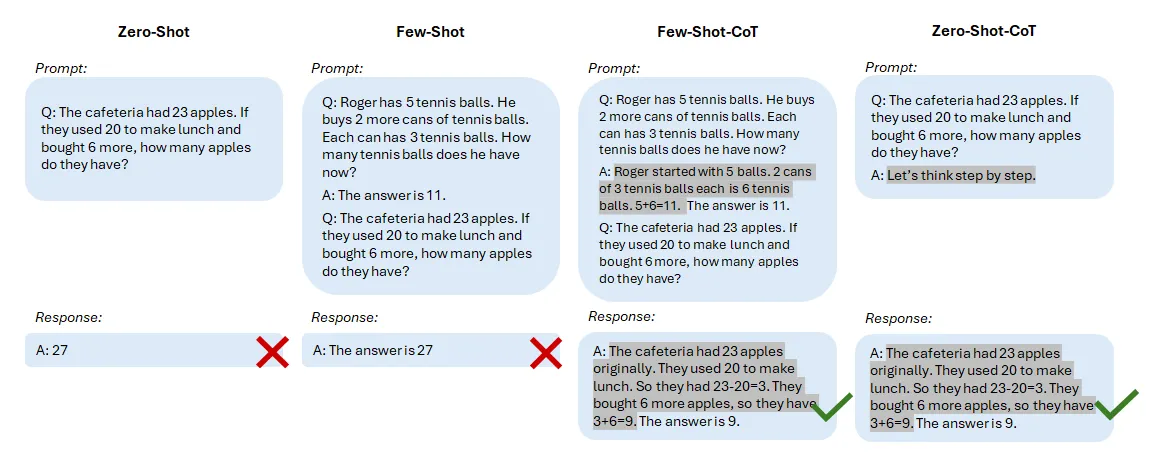

Zero-Shot Learning 0️⃣¶

This involves giving the AI a task without any prior examples. You describe what you want in detail, assuming the AI has no prior knowledge of the task.

One-Shot Learning 1️⃣¶

You provide one example along with your prompt. This helps the AI understand the context or format you’re expecting.

Few-Shot Prompting 💉¶

This involves providing a few examples (usually 2–5) to help the AI understand the pattern or style of the response you’re looking for.

It is definitely more computationally expensive as you’ll be including more input tokens

Chain of Thought Prompting 🧠¶

Chain-of-thought (CoT) prompting is an approach where the model is prompted to articulate its reasoning process. CoT is used either with zero-shot or few-shot learning. The idea of Zero-shot CoT is to suggest a model to think step by step in order to come to the solution.

Tip

In the context of using CoTs for LLM judges, it involves including detailed evaluation steps in the prompt instead of vague, high-level criteria to help a judge LLM perform more accurate and reliable evaluations.

Iterative Prompting 🔂¶

This is a process where you refine your prompt based on the outputs you get, slowly guiding the AI to the desired answer or style of answer.

Negative Prompting ⛔️¶

In this method, you tell the AI what not to do. For instance, you might specify that you don’t want a certain type of content in the response.

Hybrid Prompting 🚀¶

Combining different methods, like few-shot with chain-of-thought, to get more precise or creative outputs.

Prompt Chaining ⛓️💥¶

Breaking down a complex task into smaller prompts and then chaining the outputs together to form a final response.

Multiple Prompting¶

Voting: Self Consistancy 🗳️¶

Divide n Conquer Prompting ⌹¶

The Divide-and-Conquer Prompting in Large Language Models Paper paper proposes a "Divide-and-Conquer" (D&C) program to guide large language models (LLMs) in solving complex problems. The key idea is to break down a problem into smaller, more manageable sub-problems that can be solved individually before combining the results.

The D&C program consists of three main components:

-

Problem Decomposer: This module takes a complex problem and divides it into a series of smaller, more focused sub-problems.

-

Sub-Problem Solver: This component uses the LLM to solve each of the sub-problems generated by the Problem Decomposer.

-

Solution Composer: The final module combines the solutions to the sub-problems to arrive at the overall solution to the original complex problem.

The researchers evaluate their D&C approach on a range of tasks, including introductory computer science problems and other multi-step reasoning challenges. They find that the D&C program consistently outperforms standard LLM-based approaches, particularly on more complex problems that require structured reasoning and problem-solving skills.

External tools¶

RAG 🧮¶

Checkout Rag Types blog post for more info

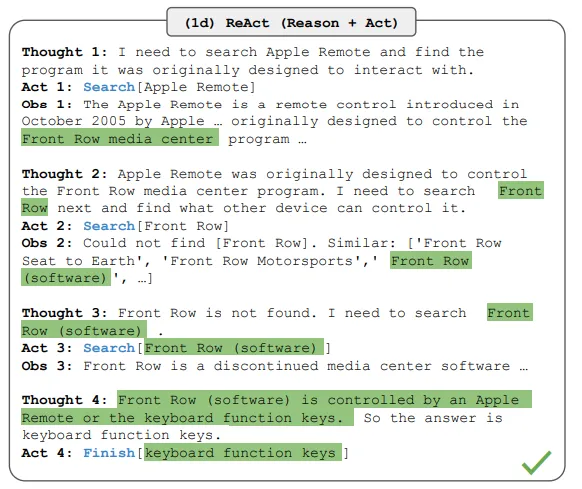

ReAct 🧩¶

Yao et al. 2022 introduced a framework named ReAct where LLMs are used to generate both reasoning traces and task-specific actions in an interleaved manner: reasoning traces help the model induce, track, and update action plans as well as handle exceptions, while actions allow it to interface with and gather additional information from external sources such as knowledge bases or environments.

ReAct framework can select one of the available tools (such as Search engine, calculator, SQL agent), apply it and analyze the result to decide on the next action.

What problem ReAct solves?

ReAct overcomes prevalent issues of hallucination and error propagation in chain-of-thought reasoning by interacting with a simple Wikipedia API, and generating human-like task-solving trajectories that are more interpretable than baselines without reasoning traces (Yao et al. (2022)).